Trying to install k8s with kubeadm

Installing the control plane with kubeadm

Machine configuration

# Disable swap
swapoff -a # temporary, until the next reboot
sed -i '/.*swap.*/d' /etc/fstab # permanent: deletes every fstab line mentioning swap, effective from the next boot
# Load kernel modules
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF
sudo modprobe overlay
sudo modprobe br_netfilter
# sysctl params required by setup, params persist across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
# Apply sysctl params without reboot
sudo sysctl --system
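You can check the settings above without a reboot by reading /proc directly. This is a minimal sketch. The function name k8s_preflight and the PROC_ROOT override are my own and are not part of kubeadm. PROC_ROOT exists only so the check can run against a fake directory tree. kubeadm's own preflight checks cover similar ground.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that swap is off and the three sysctl keys are 1.
# PROC_ROOT defaults to /proc; it can point at a fake tree for testing.
k8s_preflight() {
  local proc="${PROC_ROOT:-/proc}" rc=0 key path
  # /proc/swaps always has one header line; any further line is an active swap area
  if [ "$(wc -l < "$proc/swaps")" -gt 1 ]; then
    echo "swap is still enabled"
    rc=1
  fi
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    # sysctl names map to /proc/sys paths by replacing dots with slashes
    path="$proc/sys/$(echo "$key" | tr . /)"
    if [ ! -r "$path" ]; then
      # the bridge keys only exist once br_netfilter is loaded
      echo "$key not found (is br_netfilter loaded?)"
      rc=1
    elif [ "$(cat "$path")" != "1" ]; then
      echo "$key is $(cat "$path"), expected 1"
      rc=1
    fi
  done
  return "$rc"
}

# Usage: k8s_preflight && echo "ready for kubeadm"
```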

Install containerd

The current version is 1.7.2.

wget https://github.com/containerd/containerd/releases/download/v1.7.2/containerd-1.7.2-linux-amd64.tar.gz -O /tmp/containerd.tar.gz
tar -zxvf /tmp/containerd.tar.gz -C /usr/local
containerd -v # 1.7.2

## runc
wget https://github.com/opencontainers/runc/releases/download/v1.1.7/runc.amd64 -O /tmp/runc.amd64
install -m 755 /tmp/runc.amd64 /usr/local/sbin/runc
## CNI plugins
wget https://github.com/containernetworking/plugins/releases/download/v1.3.0/cni-plugins-linux-amd64-v1.3.0.tgz -O /tmp/cni-plugins-linux-amd64-v1.3.0.tgz
mkdir -p /opt/cni/bin
tar Cxzvf /opt/cni/bin /tmp/cni-plugins-linux-amd64-v1.3.0.tgz
## Generate the containerd config file
mkdir -p /etc/containerd
containerd config default > /etc/containerd/config.toml
## Use systemd as the cgroup driver
sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml
## Use the sandbox (pause) image from the Aliyun mirror, matching the kubeadm init --image-repository used below; otherwise the control plane fails to start during kubeadm init
sed -i 's/sandbox_image.*/sandbox_image = "registry.aliyuncs.com\/google_containers\/pause:3.9"/' /etc/containerd/config.toml
## If cri is listed in disabled_plugins in /etc/containerd/config.toml, remove it
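This is a quick way to confirm that the edits above actually landed in config.toml. The output of containerd config default normally already contains disabled_plugins = []. A "cri" entry usually comes from a distribution package that ships its own config.toml. check_containerd_config is a hypothetical helper, and it only looks for the exact strings used in this note.

```bash
#!/usr/bin/env bash
# Hypothetical helper: sanity-check the edits made to containerd's config.toml.
check_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}" rc=0
  grep -q 'SystemdCgroup = true' "$cfg" || { echo "SystemdCgroup is not true"; rc=1; }
  grep -q 'sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"' "$cfg" \
    || { echo "sandbox_image does not point at the Aliyun pause image"; rc=1; }
  # kubelet talks to containerd through the CRI plugin, so it must not be disabled
  if grep -E '^[[:space:]]*disabled_plugins' "$cfg" | grep -q '"cri"'; then
    echo 'cri is still listed in disabled_plugins'
    rc=1
  fi
  return "$rc"
}

# Usage: check_containerd_config && systemctl restart containerd
```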

## systemd service
wget https://raw.githubusercontent.com/containerd/containerd/main/containerd.service -O /lib/systemd/system/containerd.service

Add proxy settings to containerd.service. Without them, images cannot be pulled and the Calico network plugin cannot be installed either.

vim /lib/systemd/system/containerd.service
# Add the proxy settings to the [Service] section
# About NO_PROXY:
# 10.96.0.0/12 is the default of kubeadm init --service-cidr (covered by 10.0.0.0/8 below)
# 192.168.0.0/16 is the value we pass to kubeadm init --pod-network-cidr, which is also the range the Calico network plugin uses
Environment="HTTP_PROXY=http://127.0.0.1:3128/"
Environment="HTTPS_PROXY=http://127.0.0.1:3128/"
Environment="NO_PROXY=192.168.0.0/16,127.0.0.1,10.0.0.0/8,172.16.0.0/12,localhost"
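Editing the unit file under /lib/systemd/system works, but the change is lost whenever that file is downloaded again. A systemd drop-in directory keeps the proxy settings in a separate file that survives such updates. This is a minimal sketch. The helper name and the file name http-proxy.conf are my own choices, and UNIT_DIR exists only to make the helper testable.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write containerd's proxy settings as a systemd drop-in
# instead of editing /lib/systemd/system/containerd.service directly.
# UNIT_DIR defaults to /etc/systemd/system; override it for testing.
write_containerd_proxy_dropin() {
  local proxy="$1" no_proxy="$2"
  local dir="${UNIT_DIR:-/etc/systemd/system}/containerd.service.d"
  mkdir -p "$dir"
  cat > "$dir/http-proxy.conf" <<EOF
[Service]
Environment="HTTP_PROXY=$proxy"
Environment="HTTPS_PROXY=$proxy"
Environment="NO_PROXY=$no_proxy"
EOF
}

# Usage:
# write_containerd_proxy_dropin http://127.0.0.1:3128/ \
#   "192.168.0.0/16,127.0.0.1,10.0.0.0/8,172.16.0.0/12,localhost"
```

After writing the drop-in, run systemctl daemon-reload and systemctl restart containerd to apply it. systemctl show --property=Environment containerd should then list the three variables.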

Start the containerd service

systemctl daemon-reload
systemctl enable --now containerd

Install crictl

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.27.0/crictl-v1.27.0-linux-amd64.tar.gz -O /tmp/crictl-v1.27.0-linux-amd64.tar.gz
tar -zxvf /tmp/crictl-v1.27.0-linux-amd64.tar.gz -C /tmp
install -m 755 /tmp/crictl /usr/local/bin/crictl
crictl --runtime-endpoint=unix:///run/containerd/containerd.sock version
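Every crictl call in this note repeats --runtime-endpoint. crictl also reads its endpoints from /etc/crictl.yaml, so writing that file once lets you drop the flag. This is a sketch with a hypothetical helper name. The target path is a parameter so you can try it on a scratch file first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a crictl config so --runtime-endpoint can be omitted.
# The target defaults to /etc/crictl.yaml.
write_crictl_config() {
  local target="${1:-/etc/crictl.yaml}"
  cat > "$target" <<'EOF'
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
EOF
}

# Usage: write_crictl_config && crictl ps -a
```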

Install kubelet, kubeadm and kubectl

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch
enabled=1
gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF
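When this was written (July 2023), the packages.cloud.google.com repository above was the documented source. The Kubernetes project has since moved its packages to the community-run pkgs.k8s.io, with one repository per minor version, and the Google-hosted repository was deprecated and frozen. The repo file above may therefore no longer work. The sketch below writes the equivalent file for pkgs.k8s.io. The function name is hypothetical, and the URL layout follows the install-kubeadm page, so re-check it there.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a yum repo file for the pkgs.k8s.io repository
# of one Kubernetes minor version (e.g. 1.27).
write_k8s_repo() {
  local minor="$1" target="${2:-/etc/yum.repos.d/kubernetes.repo}"
  local base="https://pkgs.k8s.io/core:/stable:/v${minor}/rpm/"
  cat > "$target" <<EOF
[kubernetes]
name=Kubernetes
baseurl=${base}
enabled=1
gpgcheck=1
gpgkey=${base}repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF
}

# Usage: write_k8s_repo 1.27
```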

## Disable SELinux
setenforce 0
sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
sudo yum install -y kubelet-1.27.4 kubeadm-1.27.4 kubectl-1.27.4 --disableexcludes=kubernetes # install a specific version
sudo systemctl enable --now kubelet # start kubelet; it keeps restarting until kubeadm init has run, which is expected
kubelet --version # Kubernetes v1.27.3
kubectl version --short # Client Version: v1.27.3

Initialize the control-plane node

The control-plane node is where the control-plane components, including etcd and the API server, run. It is also the node kubectl talks to.

# echo $(ip addr|grep "inet " |awk -F "[ /]+" '{print $3}'|grep -v "127.0.0.1"|head -n1) $(hostname) >> /etc/hosts
# echo 127.0.0.1 $(hostname) >> /etc/hosts
# kubeadm config print init-defaults --component-configs KubeletConfiguration > /etc/kubernetes/init-default.yaml
# sed -i 's/imageRepository: registry.k8s.io/imageRepository: registry.aliyuncs.com\/google_containers/' /etc/kubernetes/init-default.yaml
# sed -i 's/criSocket: .*/criSocket: unix:\/\/\/run\/containerd\/containerd.sock/' /etc/kubernetes/init-default.yaml
# sed -i 's/cgroupDriver: .*/cgroupDriver: systemd/' /etc/kubernetes/init-default.yaml
# # change advertiseAddress to the node's actual address
# kubeadm config images pull --config /etc/kubernetes/init-default.yaml
# kubeadm init --config /etc/kubernetes/init-default.yaml

echo $(ip addr|grep "inet " |awk -F "[ /]+" '{print $3}'|grep -v "127.0.0.1"|head -n1) $(hostname) >> /etc/hosts # map the first non-loopback IP to the hostname; /etc/hosts takes one IP per line
kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers
kubeadm init --control-plane-endpoint=$(hostname) --pod-network-cidr=192.168.0.0/16 --image-repository registry.aliyuncs.com/google_containers --cri-socket unix:///run/containerd/containerd.sock
watch crictl --runtime-endpoint=unix:///run/containerd/containerd.sock ps -a
# If anything goes wrong, run kubeadm reset and then kubeadm init again
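--pod-network-cidr=192.168.0.0/16 is Calico's default, but 192.168.x.x is also a common range for home and office LANs. Routing breaks in confusing ways if the node's own address falls inside the Pod CIDR. The same applies to the Service CIDR, whose kubeadm default (--service-cidr) is 10.96.0.0/12. Below is a pure-bash, IPv4-only sketch for checking this before kubeadm init. All function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical IPv4-only helpers: detect overlapping CIDR ranges before kubeadm init.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the first and last address of a CIDR as integers: "start end".
cidr_range() {
  local ip="${1%/*}" len="${1#*/}" n mask
  n=$(ip_to_int "$ip")
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  echo $(( n & mask )) $(( (n & mask) | (~mask & 0xFFFFFFFF) ))
}

# Succeeds (returns 0) when the two CIDRs share at least one address.
cidr_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

# Usage (HOST_IP is the node's address, e.g. the first field of `hostname -I`):
# cidr_overlap 192.168.0.0/16 "$HOST_IP/32" && echo "choose another --pod-network-cidr"
```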

Set up the kubeconfig

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
kubectl get cs # talk to the cluster with kubectl (ComponentStatus is deprecated since v1.19; kubectl get nodes works too)

Joining other nodes to the cluster: I only use the control plane here, so I skipped this step. kubeadm init prints:

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join mi:6443 --token 9j2kbw.05vncqjs7bntu4wu \
--discovery-token-ca-cert-hash sha256:7c5d6e9360110ffb1784601a06043c9467e054283e851909d70161d36e9f08ef #--ignore-preflight-errors=all
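By default, the token in the join command expires after 24 hours. On the control-plane node, kubeadm token create --print-join-command prints a fresh, complete join command. The --discovery-token-ca-cert-hash part is the SHA-256 of the cluster CA's public key. The openssl pipeline below follows the kubeadm documentation, wrapped in a function whose name is my own. It assumes the default RSA CA key.

```bash
#!/usr/bin/env bash
# Recompute the value for --discovery-token-ca-cert-hash from the cluster CA.
# Defaults to kubeadm's CA path on the control-plane node; assumes an RSA key.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}" digest
  digest=$(openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //')
  echo "sha256:$digest"
}

# Usage: ca_cert_hash   # prints sha256:<64 hex characters>
```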

Install a network plugin to fix the NotReady node

$ kubectl get nodes
NAME   STATUS     ROLES           AGE   VERSION
node   NotReady   control-plane   49m   v1.27.3
$ kubectl describe nodes node|grep KubeletNotReady
Ready False Wed, 19 Jul 2023 22:52:58 +0800 Wed, 19 Jul 2023 22:06:46 +0800 KubeletNotReady container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:Network plugin returns error: cni plugin not initialized

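Next, install the Calico network plugin. It has two prerequisites: kubeadm init was run with --pod-network-cidr=192.168.0.0/16, and containerd's proxy is set up correctly. Without the proxy, the Calico images cannot be downloaded.

# Create the Tigera operator
kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/tigera-operator.yaml
# Create the Calico network plugin
kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/custom-resources.yaml
watch kubectl get pods -n calico-system # refreshes every two seconds; wait until all Calico pods are Running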
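Calico's custom-resources.yaml hard-codes cidr: 192.168.0.0/16 in its default IP pool, which is why the Pod CIDR above must be 192.168.0.0/16. If you pass a different --pod-network-cidr to kubeadm init, download and edit the manifest before creating it instead of applying it straight from the URL. As far as I know, the manifest itself notes that the IP pool cannot be changed after installation, so the edit has to happen first. set_calico_cidr is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rewrite the IP pool CIDR in Calico's custom-resources.yaml
# so it matches the --pod-network-cidr given to kubeadm init.
set_calico_cidr() {
  local manifest="$1" cidr="$2"
  # only "cidr:" keys are touched; '#' is the sed delimiter because CIDRs contain '/'
  sed -i -E "s#^([[:space:]]*cidr:[[:space:]]*).*#\1${cidr}#" "$manifest"
}

# Usage:
# wget https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/custom-resources.yaml
# set_calico_cidr custom-resources.yaml 10.244.0.0/16
# kubectl create -f custom-resources.yaml
```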

Alternatively, install the flannel network plugin. Use either Calico or flannel, not both.

wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml -O kube-flannel.yml
sed -i 's/10.244.0.0\/16/192.168.0.0\/16/' kube-flannel.yml # flannel defaults to 10.244.0.0/16; match --pod-network-cidr instead
for i in $(grep "image: " kube-flannel.yml | awk -F '[ "]+' '{print $3}'|uniq); do
  echo pulling $i
  crictl --runtime-endpoint=unix:///run/containerd/containerd.sock pull ${i}
done
kubectl apply -f kube-flannel.yml
watch kubectl get pod -n kube-flannel
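This note repeats the same pre-pull loop for flannel, metrics-server, MetalLB and ingress-nginx. It has two small weaknesses. First, uniq only removes adjacent duplicates, so an image referenced twice non-consecutively is pulled twice. Second, the awk field split picks up "image:" instead of the image name on list-style "- image: ..." lines. Below is a reusable sketch that handles both. The function names are hypothetical, and it still assumes one image per line.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: extract unique image references from a manifest and pre-pull them.
list_images() {
  # matches "image: x", "- image: x" and quoted "image: \"x\"" forms
  sed -n -E 's/^[[:space:]]*(-[[:space:]]+)?image:[[:space:]]*"?([^"[:space:]]+)"?.*/\2/p' "$1" | sort -u
}

prepull_images() {
  local img
  for img in $(list_images "$1"); do
    echo "pulling $img"
    crictl --runtime-endpoint=unix:///run/containerd/containerd.sock pull "$img"
  done
}

# Usage: prepull_images kube-flannel.yml
```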

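Allow pods to be scheduled on the control-plane node

kubectl taint nodes --all node-role.kubernetes.io/control-plane-
kubectl taint nodes --all node-role.kubernetes.io/master- # only for older clusters; kubeadm 1.27 no longer sets this taint, so this reports "not found"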

Verify that DNS works

kubectl run curl --image=radial/busyboxplus:curl -it --rm
nslookup kubernetes.default # run inside the pod's shell

Run an nginx pod on the control-plane node

kubectl apply -f https://k8s.io/examples/pods/simple-pod.yaml
watch kubectl get pods -o wide # the nginx pod shows as Running, here with IP 192.168.254.8
curl 192.168.254.8
kubectl delete pod nginx # delete the pod

Installing common components

Installing my proxy

cat > proxy.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: proxy-deployment
spec:
  replicas: 1 # run a single pod matching the template
  selector:
    matchLabels:
      kubernetes.io/os: linux
  template:
    metadata:
      labels:
        kubernetes.io/os: linux
    spec:
      # hostNetwork is used, so it is best to pin the pod to one node
      # nodeSelector:
      #   kubernetes.io/hostname: mi
      containers:
      - image: arloor/rust_http_proxy:1.0
        imagePullPolicy: Always
        name: proxy
        env:
        - name: port
          value: "443"
        - name: basic_auth
          value: "Basic xxxxx=="
        - name: ask_for_auth
          value: "false"
        - name: over_tls
          value: "true"
        - name: raw_key
          value: "/pems/arloor.dev.key"
        - name: cert
          value: "/pems/fullchain.cer"
        - name: web_content_path
          value: "/web_content_path"
        volumeMounts:
        - mountPath: /pems
          name: pems
        - mountPath: /web_content_path
          name: content
      restartPolicy: Always
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      volumes:
      - name: pems
        hostPath:
          path: /root/.acme.sh/arloor.dev
          type: Directory
      - name: content
        hostPath:
          path: /usr/share/nginx/html/blog
          type: Directory
EOF

kubectl apply -f proxy.yaml

watch kubectl get pod

Helm package manager

wget https://get.helm.sh/helm-v3.12.0-linux-amd64.tar.gz -O /tmp/helm-v3.12.0-linux-amd64.tar.gz

tar -zxvf /tmp/helm-v3.12.0-linux-amd64.tar.gz -C /tmp

mv /tmp/linux-amd64/helm /usr/local/bin/

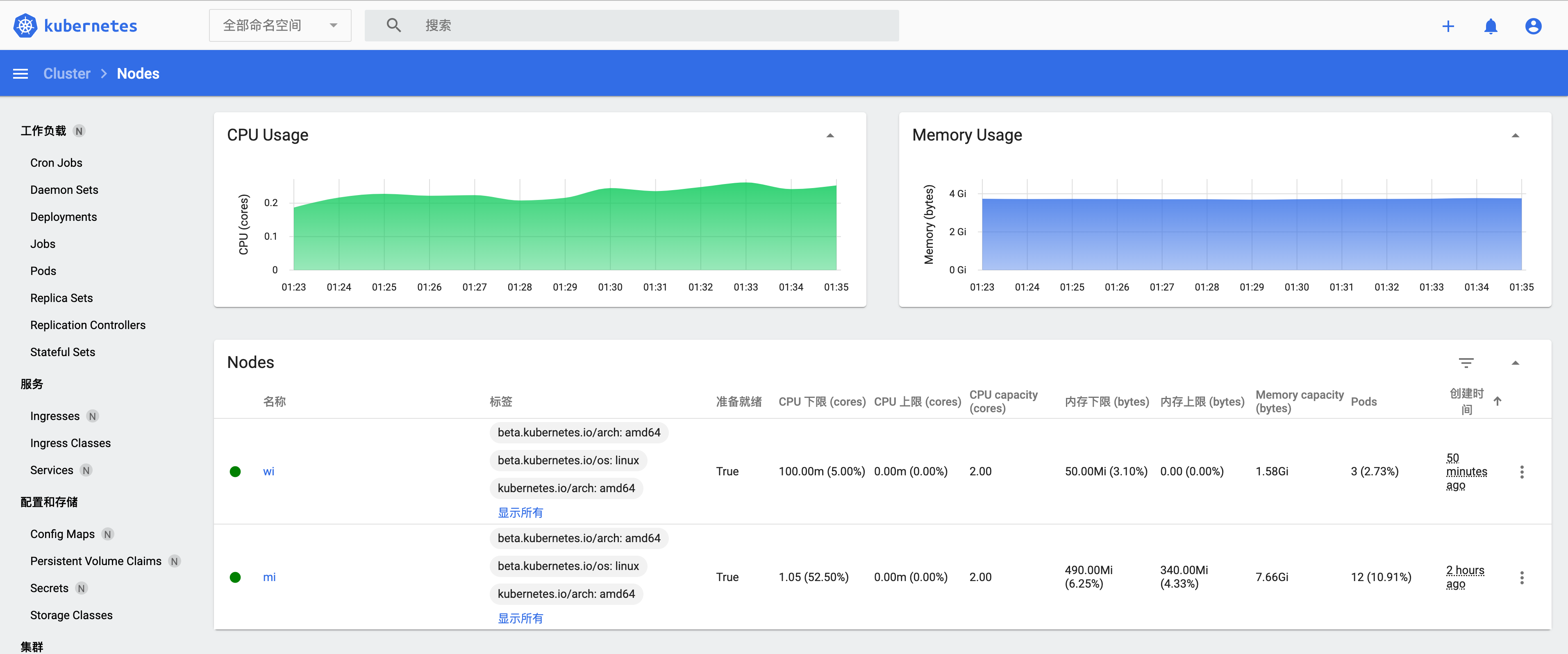

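metrics-server

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.3/components.yaml

Edit the container args in components.yaml and add --kubelet-insecure-tls. The kubelet serving certificates on a kubeadm cluster are self-signed by default, so without this flag metrics-server fails to verify them.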
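Here is one way to make that edit non-interactively. This hypothetical helper assumes that the container args in the v0.6.3 manifest include "- --metric-resolution=15s", so check your copy. It inserts the new flag right after that line with the same indentation. Running it a second time does not add another copy.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add --kubelet-insecure-tls to metrics-server's container args,
# right after the --metric-resolution line (assumed to exist in components.yaml).
add_insecure_tls_flag() {
  local manifest="$1"
  # already present: nothing to do
  grep -q -- '--kubelet-insecure-tls' "$manifest" && return 0
  awk '{
    print
    if ($0 ~ /^[ \t]*- --metric-resolution=/) {
      match($0, /^[ \t]*/)                      # reuse the indentation of the matched line
      printf "%s- --kubelet-insecure-tls\n", substr($0, 1, RLENGTH)
    }
  }' "$manifest" > "$manifest.tmp" && mv "$manifest.tmp" "$manifest"
}

# Usage: add_insecure_tls_flag components.yaml
```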

for i in $(grep "image: " components.yaml | awk -F '[ "]+' '{print $3}'|uniq); do
  echo pulling $i
  crictl --runtime-endpoint=unix:///run/containerd/containerd.sock pull ${i}
done
crictl --runtime-endpoint=unix:///run/containerd/containerd.sock images|grep registry.k8s.io
kubectl apply -f components.yaml
watch kubectl get pod -n kube-system

kubectl get service -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.97.175.254 <pending> 80:30873/TCP,443:31834/TCP 4m42s

ingress-nginx-controller-admission ClusterIP 10.99.75.35 <none> 443/TCP 4m42s

Once the metrics-server pod is running, wait a little and kubectl top will show node and pod metrics.

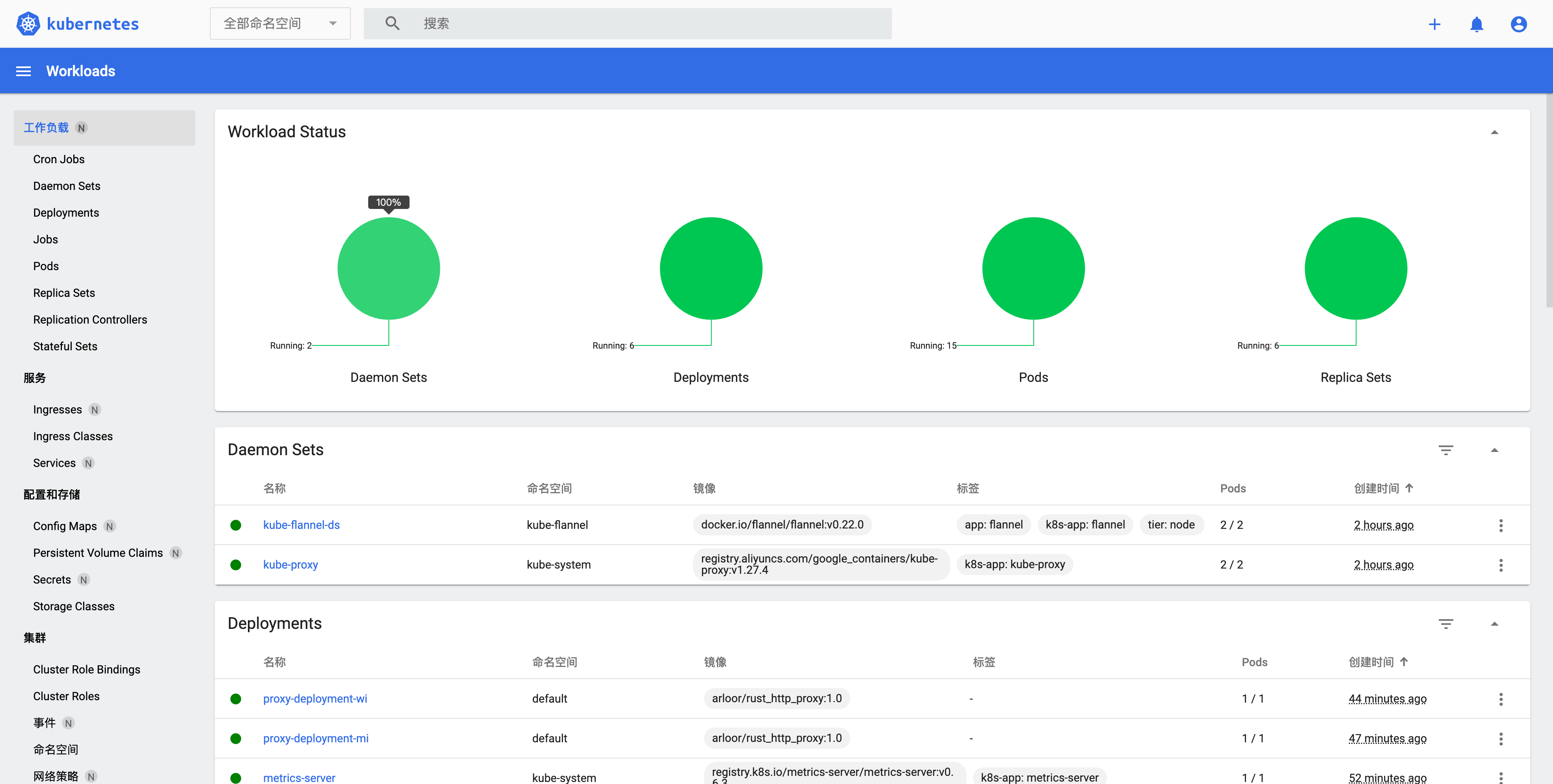

kubernetes dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml -O dashboard.yaml

Edit dashboard.yaml to use hostNetwork (reference: "K8S Dashboard installation/operation"):

- In the spec of Service/kubernetes-dashboard, add type: NodePort
- In Deployment/dashboard-metrics-scraper, add hostNetwork: true and dnsPolicy: ClusterFirstWithHostNet at the end, at the same level as containers:
- In the args of the kubernetes-dashboard container, add - --token-ttl=43200 to extend the token lifetime to 12 hours

kubectl apply -f dashboard.yaml
watch kubectl get svc -n kubernetes-dashboard
NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)         AGE   SELECTOR
dashboard-metrics-scraper   ClusterIP   10.106.143.149   <none>        8000/TCP        53s   k8s-app=dashboard-metrics-scraper
kubernetes-dashboard        NodePort    10.97.248.169    <none>        443:31611/TCP   53s   k8s-app=kubernetes-dashboard

443:31611/TCP means the dashboard can be reached from outside at <public IP>:31611. The following command extracts the port quickly:

kubectl get svc -n kubernetes-dashboard |grep NodePort|awk -F '[ :/]+' '{print $6}'
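The awk one-liner depends on the column layout of kubectl get svc. Reading the field directly is more robust: kubectl -n kubernetes-dashboard get svc kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}'. If you prefer to keep parsing the table, you can split only the PORT(S) column instead of counting fields across the whole line. The function below is a hypothetical sketch that reads the table on stdin.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the node port(s) of every NodePort service in
# `kubectl get svc` output read from stdin (column 5 is PORT(S), e.g. 443:31611/TCP).
nodeports_from_table() {
  awk '$2 == "NodePort" {
    n = split($5, ports, ",")          # a service may expose several ports
    for (i = 1; i <= n; i++) {
      split(ports[i], p, "[:/]")       # "443:31611/TCP" -> 443, 31611, TCP
      print p[2]
    }
  }'
}

# Usage: kubectl get svc -n kubernetes-dashboard | nodeports_from_table
```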

Generate an access token (reference: creating-sample-user):

kubectl apply -f - <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

Print the port and generate a token, then open https://<ip>:31611 and log in to the dashboard with the token.

cat > /usr/local/bin/token <<\EOF
#!/bin/bash
echo Port: `kubectl get svc -n kubernetes-dashboard |grep NodePort|awk -F '[ :/]+' '{print $6}'`
echo
echo Token:
kubectl -n kubernetes-dashboard create token admin-user
EOF
chmod +x /usr/local/bin/token
token


Other

MetalLB

metallb-native.yaml

wget https://raw.githubusercontent.com/metallb/metallb/v0.13.10/config/manifests/metallb-native.yaml -O metallb-native.yaml
for i in $(grep "image: " metallb-native.yaml | awk -F '[ "]+' '{print $3}'|uniq); do
  echo pulling $i
  crictl --runtime-endpoint=unix:///run/containerd/containerd.sock pull ${i}
done
kubectl apply -f metallb-native.yaml

cat > l2.yaml <<EOF
---
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: default
  namespace: metallb-system
spec:
  addresses:
  - 10.0.4.100-10.0.4.200
  autoAssign: true
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: default
  namespace: metallb-system
spec:
  ipAddressPools:
  - default
EOF
kubectl apply -f l2.yaml
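In L2 mode, MetalLB itself answers ARP requests for the pool addresses. The pool (10.0.4.100-10.0.4.200 here) is therefore normally taken from the nodes' own subnet and kept out of any DHCP range. Below is a pure-bash, IPv4-only check that a range lies within a subnet. The 10.0.4.0/24 subnet in the example is an assumption about this network, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical IPv4-only helpers: check a MetalLB address range against a subnet.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds when START-END is a valid range and every address in it lies inside CIDR.
range_in_cidr() {
  local start end net len mask
  start=$(ip_to_int "${1%-*}")
  end=$(ip_to_int "${1#*-}")
  net=$(ip_to_int "${2%/*}")
  len="${2#*/}"
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( start <= end && (start & mask) == (net & mask) && (end & mask) == (net & mask) ))
}

# Usage: range_in_cidr 10.0.4.100-10.0.4.200 10.0.4.0/24 && echo "pool fits the subnet"
# Pool size: echo $(( $(ip_to_int 10.0.4.200) - $(ip_to_int 10.0.4.100) + 1 ))   # 101
```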

Installing ingress-nginx and exposing ports 18080 and 1443 via hostNetwork

wget https://github.com/kubernetes/ingress-nginx/releases/download/helm-chart-4.7.1/ingress-nginx-4.7.1.tgz
helm show values ingress-nginx-4.7.1.tgz > values.yaml # list the values that can be configured

Edit values.yaml: switch to hostNetwork and change containerPort to uncommon ports. Our environment has no LoadBalancer, so hostNetwork is used instead.

containerPort:
  http: 18080
  https: 1443
....
hostNetwork: true
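In the chart's values.yaml these keys sit under controller:. Changing containerPort alone only changes the ports declared on the container. nginx keeps listening on 80/443 unless the controller is started with --http-port/--https-port, which is why the Deployment args get edited by hand later in this note. The chart renders each controller.extraArgs entry as a --key=value argument, so you can probably set the same flags at install time. Below is a sketch of a small override file. The file name ingress-overrides.yaml and the helper are hypothetical. Render it with helm template first to confirm the args come out as expected.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a small override file for the ingress-nginx chart
# that enables hostNetwork and moves nginx's listen ports via extraArgs.
write_ingress_overrides() {
  local target="${1:-ingress-overrides.yaml}" http="${2:-18080}" https="${3:-1443}"
  cat > "$target" <<EOF
controller:
  hostNetwork: true
  dnsPolicy: ClusterFirstWithHostNet
  containerPort:
    http: ${http}
    https: ${https}
  extraArgs:
    http-port: "${http}"
    https-port: "${https}"
EOF
}

# Usage: check the rendered args first, then install with the override file
# write_ingress_overrides
# helm template ingress-nginx ingress-nginx-4.7.1.tgz -f ingress-overrides.yaml | grep -- '--http'
# helm install ingress-nginx ingress-nginx-4.7.1.tgz --create-namespace -n ingress-nginx -f ingress-overrides.yaml
```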

## Pre-pull the registry.k8s.io images
helm template ingress-nginx-4.7.1.tgz -f values.yaml > ingress-nginx-deploy.yaml
for i in $(grep "image: " ingress-nginx-deploy.yaml | awk -F '[ "]+' '{print $3}'|uniq); do
  echo pulling $i
  crictl --runtime-endpoint=unix:///run/containerd/containerd.sock pull ${i}
done
crictl --runtime-endpoint=unix:///run/containerd/containerd.sock images|grep registry.k8s.io
systemctl disable rust_http_proxy --now # stop every service that occupies ports 80 and 443
helm install ingress-nginx ingress-nginx-4.7.1.tgz --create-namespace -n ingress-nginx -f values.yaml


watch kubectl get pods -o wide -n ingress-nginx

kubectl get services -o wide

kubectl get controller -o wide

Changing the ports

$ kubectl edit deployment release-name-ingress-nginx-controller # not sure whether extraArgs in values.yaml would work instead

In the editor, search for "--" with /, then change the args to:

spec:
  containers:
  - args:
    - /nginx-ingress-controller
    - --publish-service=$(POD_NAMESPACE)/release-name-ingress-nginx-controller
    - --election-id=release-name-ingress-nginx-leader
    - --controller-class=k8s.io/ingress-nginx
    - --ingress-class=nginx
    - --configmap=$(POD_NAMESPACE)/release-name-ingress-nginx-controller
    - --validating-webhook=:8443
    - --validating-webhook-certificate=/usr/local/certificates/cert
    - --validating-webhook-key=/usr/local/certificates/key
    ## add the following port settings
    - --http-port=18080
    - --https-port=1443

$ kubectl delete pod release-name-ingress-nginx-controller-5c65485f4c-lnm2r # delete the Deployment's old pod; a new one is created with the new args
systemctl enable rust_http_proxy --now # start the services stopped earlier again
curl http://xxxx:18080 # a 404 response means it works

Installing ingress-nginx as a NodePort service

wget -O ingress-nginx.yaml https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.8.1/deploy/static/provider/baremetal/deploy.yaml
for i in $(grep "image: " ingress-nginx.yaml | awk -F '[ "]+' '{print $3}'|uniq); do
  echo pulling $i
  crictl --runtime-endpoint=unix:///run/containerd/containerd.sock pull ${i}
done
kubectl apply -f ingress-nginx.yaml
watch kubectl get service -A

Problem: creating an Ingress while following the local-testing example fails because the API server cannot reach the admission webhook.

$ kubectl create deployment demo --image=httpd --port=80
$ kubectl expose deployment demo
$ kubectl create ingress demo-localhost --class=nginx \
--rule="demo.localdev.me/*=demo:80"
error: failed to create ingress: Internal error occurred: failed calling webhook "validate.nginx.ingress.kubernetes.io": failed to call webhook: Post "https://ingress-nginx-controller-admission.ingress-nginx.svc:443/networking/v1/ingresses?timeout=10s": EOF

Testing DNS:

$ kubectl run curl --image=radial/busyboxplus:curl -it
$ nslookup ingress-nginx-controller-admission.ingress-nginx.svc # DNS resolves
Server: 10.96.0.10
Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local
Name: ingress-nginx-controller-admission.ingress-nginx.svc
Address 1: 10.108.175.87 ingress-nginx-controller-admission.ingress-nginx.svc.cluster.local
$ curl https://ingress-nginx-controller-admission.ingress-nginx.svc:443/networking/v1/ingresses?timeout=10s
curl: (6) Couldn't resolve host 'ingress-nginx-controller-admission.ingress-nginx.svc'

$ curl https://ingress-nginx-controller-admission.ingress-nginx.svc.cluster.local:443/networking/v1/ingresses?timeout=10s -k -v

* SSLv3, TLS handshake, Client hello (1):

* SSLv3, TLS handshake, Server hello (2):

* SSLv3, TLS handshake, CERT (11):

* SSLv3, TLS handshake, Server key exchange (12):

* SSLv3, TLS handshake, Server finished (14):

* SSLv3, TLS handshake, Client key exchange (16):

* SSLv3, TLS change cipher, Client hello (1):

* SSLv3, TLS handshake, Finished (20):

* SSLv3, TLS change cipher, Client hello (1):

* SSLv3, TLS handshake, Finished (20):

> GET /networking/v1/ingresses?timeout=10s HTTP/1.1

> User-Agent: curl/7.35.0

> Host: ingress-nginx-controller-admission.ingress-nginx.svc.cluster.local

> Accept: */*

>

< HTTP/1.1 400 Bad Request

< Date: Fri, 21 Jul 2023 03:02:07 GMT

< Content-Length: 0

<

The same happens with kubernetes.default:

[ root@curl:/ ]$ nslookup kubernetes.default

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

[ root@curl:/ ]$ curl https://kubernetes.default -k

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",

"reason": "Forbidden",

"details": {},

"code": 403

[ root@curl:/ ]$ curl https://kubernetes.default.svc -k

curl: (6) Couldn't resolve host 'kubernetes.default.svc'

[ root@curl:/ ]$ curl https://kubernetes.default.svc.cluster.local -k

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",

"reason": "Forbidden",

"details": {},

"code": 403

}